What the fish is Edge Computing?

Understanding what edge computing is, with examples.

The IT kingdom is expanding at a rate of knots, faster than it was growing before the pandemic hit. More people are using internet-connected phones and IoT devices. 4G is being adopted even in underdeveloped countries, while the developed and developing world prepare for the advent of 5G. The massive growth of user-created and organizational data is the new hurdle the IT crowd is battling these days. Businesses cannot keep up with the pace of change unless they are ready for a flood of data and able to process it efficiently. The cloud once promised to offer everything businesses need, but it no longer fully delivers on that promise.

While it is true that the cloud has no substitute in the near future (unless you are a believer in web3, WAGMI!), it is also true that storing essential data outside your organization's premises exposes it to hackers. It is also true that transferring terabytes of data to and from your cloud service provider every day runs up a hefty bill.

So, if you are not adopting the edge, your online business will most definitely fail.

Okay, that statement is not entirely true. Edge computing is still in the very early stages of adoption, and your business need not make the switch just yet. The IoT industry is the primary target of edge computing. But before I explain the whys and the hows, check out this excellent business idea.

Imagine a DVD player that sends the data from a disc to a server on another continent for decoding, and that server sends back a playable stream for your television to display. Sounds futuristic and ludicrous at the same time, right? You buy the DVD player, buy the disc with the movie you want to watch, and also pay a monthly subscription for the online video codec. What purpose does the DVD player even serve? Just sending and receiving data from the servers? Could I have made the DVD player any dumber than this?

Look around you before you decide this is dumb and foolish and file a lawsuit against me. There is probably an equally dumb device sitting near you. Does it look something like this?

Think about it. Your Alexa device is just a speaker that connects to the internet for data transfer. When you ask Alexa something, it compresses your command and sends it to wherever the data is processed. There, a few API calls fetch the appropriate data, and the spoken response is sent back to the device to play. Does my idea sound foolish now?

For most of you, that might not be a compelling enough reason to call the device dumb. Well, there are three significant issues with the current model.

What benefits does edge computing offer?

Latency: Let's take an example. You want to order pizza, but there is no phone in your house. The only phone you can use is in your office, 5 kilometers away. So, to have pizza with your family, you would have to take everyone's order, drive to your office, and repeat the order to the receptionist, who in turn calls the pizzeria. Then you wait for the delivery agent to bring the pizza to your office, and finally take it back home and distribute it. See the problem? Wouldn't it have been better to have a phone of your own? You could have saved a lot of time. This is exactly how smart assistant devices work. They do not have their own "phones." If you ask one something, it has to "drive to its office" (Amazon, Google, or Apple servers) to make a request. This round trip adds latency, which results in a poor user experience. If smart assistant devices could analyze the voice input and make API calls on their own, the response delay could be reduced.

Security and Privacy: Would you be comfortable calling your loved ones in front of a stranger every time? Your smart assistant devices are always listening to whatever is said around them; that's how they can pick up the wake word at any time. Who's to say they are not sending everything to their servers, even when they are not being spoken to? I am not saying that they do, but who knows? If speech could be parsed at the point of origin, security and privacy could be improved drastically.

Bandwidth: When everything is processed on the server, everything generated at the origin has to be sent to the server. This requires a lot of bandwidth. You might argue that a voice sample is not much data. Correct, so we need a different example. IoT devices are not limited to smart speakers. Consider a vast compound with about 20 high-definition surveillance cameras monitored by a security firm. Everything each camera captures is sent over the internet to the firm for analysis. A 1080p camera produces about 35 GB of footage per day on average, so a 20-camera network sends about 700 GB per day. That's a lot of bandwidth to dedicate to cameras. What if the cameras transmitted their feed to the firm only when they detected movement? That would save a lot of data, wouldn't it?
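To make the bandwidth arithmetic concrete, here is a minimal Python sketch. It reproduces the 20 × 35 GB = 700 GB figure and shows one simple way a camera could decide locally whether a frame is worth sending: frame differencing, which flags a frame when its average per-pixel change from the previous frame exceeds a threshold. The function names, the threshold of 10, and the representation of frames as flat lists of grayscale values are illustrative assumptions; real cameras use more robust motion detection.

```python
# Figure from the article: a 1080p camera produces ~35 GB of footage per day.
GB_PER_CAMERA_PER_DAY = 35
NUM_CAMERAS = 20


def daily_upload_gb(num_cameras: int, gb_per_camera: float, active_fraction: float = 1.0) -> float:
    """Total GB uploaded per day if each camera sends only `active_fraction` of its footage."""
    return num_cameras * gb_per_camera * active_fraction


def mean_abs_diff(prev: list[int], curr: list[int]) -> float:
    """Average per-pixel brightness change between two equally sized grayscale frames."""
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)


def frames_to_upload(frames: list[list[int]], threshold: float = 10.0) -> list[int]:
    """Return indices of frames that changed enough from the previous frame to count as motion.

    The threshold is a hypothetical tuning value, not a recommended setting.
    """
    selected = []
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            selected.append(i)  # motion detected: this frame would be sent to the firm
    return selected
```

For example, `daily_upload_gb(NUM_CAMERAS, GB_PER_CAMERA_PER_DAY)` returns `700.0`; with motion gating, only the frames whose indices `frames_to_upload` returns would leave the compound.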

Gosh!!! Would you tell me what it is???

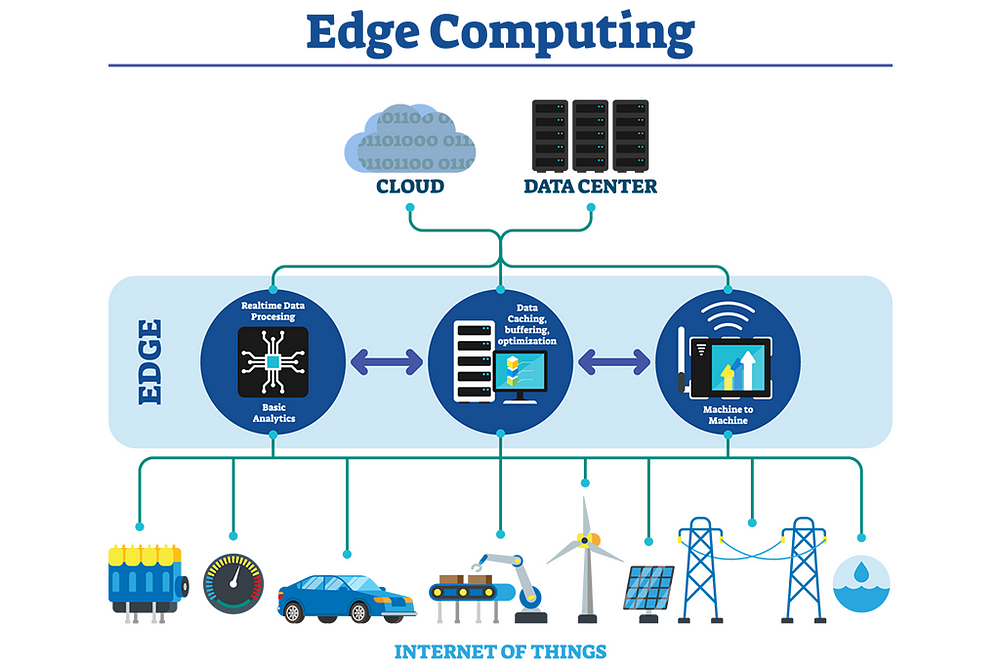

Now that you know the problem with the current system, let me show you what edge computing brings to the table. Edge computing is not as complicated as you may have expected. It does not replace the cloud; it brings the cloud to you. Don't get it? Let me simplify it for you.

Remember the smart assistant example we just talked about? Because the voice sample is sent to the servers, it causes latency and privacy concerns (privacy is secondary here; focus on latency). Edge computing is all about placing computational power close to the source of data generation, or at the "edge of the network," as it is called. For example, your Alexa device could recognize a command on its own and make API calls autonomously, without bothering the centralized servers every time. The latency would be significantly reduced. And this is not just pretend-talk: Amazon has already been working on such edge devices and has developed a powerful chip that can handle voice recognition.
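Here is a hedged sketch of that routing idea: the device handles the commands it can recognize on its own and falls back to the cloud only for everything else. A small dictionary stands in for an on-device speech and intent model, and `cloud_handler` stands in for the network round trip. All names are hypothetical; this is not how Alexa or Amazon's chip actually works.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Response:
    text: str
    handled_on_device: bool


# Hypothetical on-device "model": a tiny table of commands the device can
# recognize by itself. A real device would run a speech/intent model here.
LOCAL_INTENTS: dict[str, Callable[[], str]] = {
    "turn on the lights": lambda: "Lights on.",
    "turn off the lights": lambda: "Lights off.",
    "what time is it": lambda: time.strftime("It is %H:%M."),
}


def cloud_handler(command: str) -> str:
    """Stand-in for the slow round trip to a remote server (hypothetical)."""
    return f"Cloud answer for: {command}"


def handle(command: str, cloud: Callable[[str], str] = cloud_handler) -> Response:
    """Answer locally when possible; otherwise forward the command to the cloud."""
    key = command.strip().lower().rstrip("?.!")
    if key in LOCAL_INTENTS:
        return Response(LOCAL_INTENTS[key](), handled_on_device=True)
    return Response(cloud(command), handled_on_device=False)
```

Only commands outside the local table pay the network latency; everything the device recognizes is answered without leaving the room.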

Another use case is the self-driving car. Autonomous cars need to act on data from their sensors in real time. They cannot wait for a response from a server before making a turn. What if there is a connectivity issue? What if the instruction arrives too late? Both problems can be tackled by adding edge devices to the car that make decisions by analyzing real-time data from all the vehicle's sensors and send only periodic updates to the data center.

A smart farm that monitors crop health need not transfer data 24x7. On-site edge devices can read the current temperature from sensors and automatically raise or lower it as needed. Only the necessary data has to be uploaded, every once in a while.
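A minimal sketch of the smart-farm loop, assuming a simple on/off (bang-bang) controller with a deadband: every reading is acted on locally, and only a summary is uploaded every `upload_every` readings. The target temperature, the deadband, and the `EdgeController` class are hypothetical, and the `uploads` list stands in for whatever actually sends data to the cloud.

```python
from statistics import mean

TARGET_C = 24.0    # hypothetical target temperature
DEADBAND_C = 1.0   # hypothetical tolerance before the controller reacts


def control_action(temp_c: float, target: float = TARGET_C, band: float = DEADBAND_C) -> str:
    """On/off control with a deadband: heat when too cold, cool when too hot."""
    if temp_c < target - band:
        return "heat"
    if temp_c > target + band:
        return "cool"
    return "idle"


class EdgeController:
    """Acts on every reading on-site, but uploads only a periodic summary."""

    def __init__(self, upload_every: int):
        self.upload_every = upload_every
        self.buffer: list[float] = []
        self.uploads: list[dict] = []  # stands in for messages sent to the cloud

    def on_reading(self, temp_c: float) -> str:
        action = control_action(temp_c)  # decided locally, no server round trip
        self.buffer.append(temp_c)
        if len(self.buffer) >= self.upload_every:
            self.uploads.append({
                "count": len(self.buffer),
                "min": min(self.buffer),
                "max": max(self.buffer),
                "mean": mean(self.buffer),
            })
            self.buffer.clear()
        return action
```

The deadband keeps the heater and cooler from toggling on every small fluctuation, and summarizing the buffer means the cloud receives one small record instead of every raw reading.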

Are there downsides to edge computing?

Adding more devices that handle sensitive information can increase the number of vulnerable points for hackers to exploit.

Adding more hardware to the network would require increased capital investments.

Maintenance costs will shoot up because of the added infrastructure.

Development teams must design edge equipment so that it discards only irrelevant data, without losing the crucial data needed for further analysis.
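One common way to strike this balance is report-by-exception with a deadband: a reading is kept only if it has moved more than a set amount since the last kept value, plus a periodic "heartbeat" so the server knows the sensor is still alive. This is a technique I'm swapping in for illustration, not something prescribed above; the function name and its parameters are hypothetical.

```python
def report_by_exception(
    readings: list[float], deadband: float, heartbeat: int
) -> list[tuple[int, float]]:
    """Keep a reading only if it moved more than `deadband` from the last kept
    value, or if `heartbeat` readings have passed since the last kept one.

    Returns (index, value) pairs so the server can still tell when each change happened.
    """
    kept: list[tuple[int, float]] = []
    for i, value in enumerate(readings):
        if not kept:
            kept.append((i, value))  # always keep the first reading as a baseline
            continue
        last_i, last_v = kept[-1]
        if abs(value - last_v) > deadband or i - last_i >= heartbeat:
            kept.append((i, value))
    return kept
```

Choosing the deadband is exactly the trade-off described above: too wide and real changes are lost, too narrow and almost nothing is discarded.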

TL;DR

To summarize, edge computing places powerful devices close to the edge of the network (near end-user devices), where they analyze data in real time, act on the results, and send only the necessary data to centralized servers. It's all about bringing the cloud closer to you without you even noticing. It is still in a very early phase, and worldwide availability of edge services is a long way off, but the edge computing industry is forecast to become a multi-billion-dollar industry by 2028.